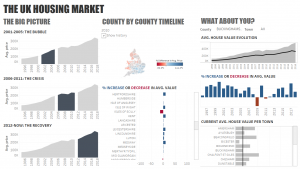

A few days back I joined a webinar by the latest Tabelau Iron Viz champion, Tristan Guillevin. Tristan created a dashboard to analyze US housing market using a summarized data set. I decided to replicate it using UK House Price Paid data that I got from the HM Land Registry. However the data I used is more granular and is around 3 GB in size. Tableau is able to handle the data of that size, but for this blogpost I have used AWS Athena as the data souce that Tableau connects to. The Dashboard looks like this:

There are three parts to this:

- Convert the 3 GB source csv file into parquet format* and store it in S3 using PySpark.

- Create a table in AWS Athena that points to the parquet file created in previous step.

- Create a dashboard in Tableau using AWS Athena as the source.

* Note: Converting to Parquet is optional and you can use the default 3 GB source file, but you will end up paying a lot more for the queries that Tableau runs on Athena and the dashboard will be a bit slower in repsonse to parameter changes.

1. Use PySpark to convert the CSV file into Parquet format

The first step is to convert the CSV file into columnar Parquet format to improve query performance and reduce cost. The PySpark code also adds column headers (missing in the source file); removes extra columns not needed for analysis; partitions the data by year. A sample Jupyter notebook that achieves this is given below:

2. Create a table in AWS Athena

In this step we will create a table in Athena using the Parquet SerDe. A SerDe (Serializer/Deserializer) is a way in which Athena interacts with data in various formats. It is the SerDe you specify, and not the DDL, that defines the table schema. In other words, the SerDe can override the DDL configuration that you specify in Athena when you create your table. If you want to learn more about using a SerDe with Athena then follow this link to AWS documentation.

Use the CREATE TABLE statement to create an Athena table from the underlying CSV file stored in Amazon S3 in Parquet.

CREATE EXTERNAL TABLE price_paid( transaction_id string, price bigint, date_of_transfer timestamp, postcode string, property_type string, old_or_new string, duration string, paon string, saon string, street string, locality string, town_city string, district string, county string, ppd_category string ) PARTITIONED BY (year int) STORED AS PARQUET LOCATION 's3://open_data-uk/land-registry/processed-data/parquet/pp-complete/' tblproperties ("parquet.compress"="SNAPPY");

Then run the MSCK REPAIR TABLE statement on the table to refresh partition metadata.

MSCK REPAIR TABLE price_paid;

3. Create a dashboard in Tableau using AWS Athena as the source

The next step is to connect Tableau to your AWS AThena table. I used tableau version 10.4 to create the dashboard and this version includes the AWS Athena driver. If you are using 10.3 or earlier you might have to download the Athena JDBC Driver (download here) .

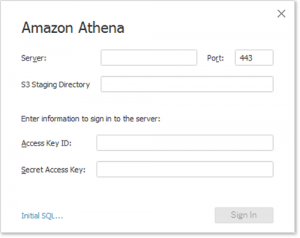

Connecting to Athena in Tableau is very simple, just select The “Connect –> To a Server –> More… –> Amazon Athena” from the menu. Tableu will then pop-up a dialog box that looks like this:

You will need the following information from your AWS Athena account:

- Server: athena.<region>.amazon.com

for example, athena.eu-west-2.amazonaws.com

- Port: 443

SSL by default - Amazon S3 Staging Directory: Athena requires a staging bucket to store results. The default bucket is s3://aws-athena-query-results-<account_number>-<region>/

- Username/Password: Access Key ID and Secret Access Key

Access Key ID and Secret Access Key associated with the IAM user you are logging in as

For more details on connecting Tableau with Athena click here.

Once you have connected Tableau to Athena you can start creating the dashboard like you would for any other data source. The webinar by the latest Tabelau Iron Viz champion, Tristan Guillevin is a good starting point.

I was not able to upload the dashboard to the Tableau Public server as the public server only works with data sources with less than 15 million rows. However I have uploaded the Tableau workbook as a packaged workbook that you can download and play around with (click here).

With thanks! Valuable information!